Conference Paper | 2024

Pretraining with random noise for fast and robust learning without weight transport

Jeonghwan Cheon, Sang Wan Lee, Se-Bum Paik

Abstract

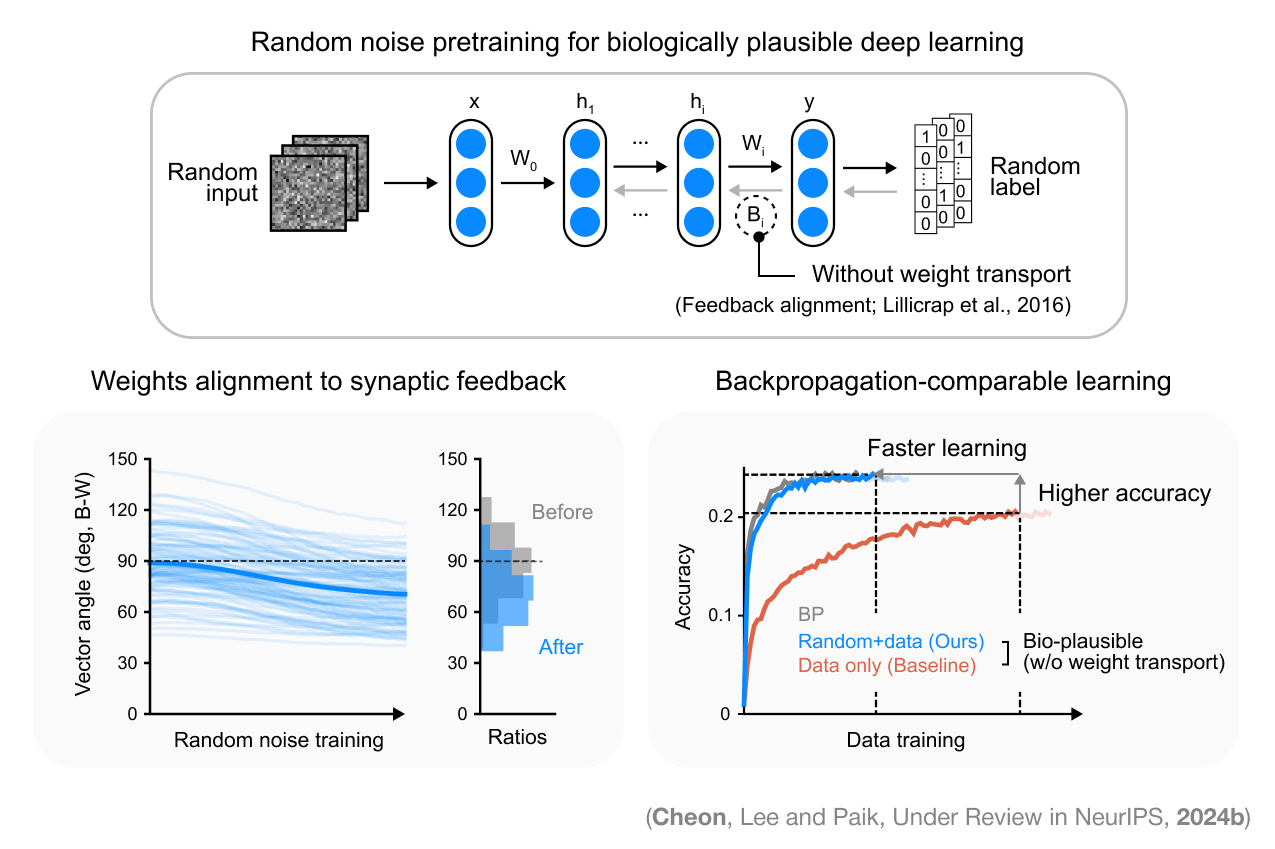

The brain prepares for learning even before interacting with the environment, by refining and optimizing its structures through spontaneous neural activity that resembles random noise. However, the mechanism of this process is not yet understood, and it is unclear whether such a process can benefit machine learning algorithms. Here, we study this issue using a neural network trained with a feedback alignment algorithm, demonstrating that pretraining neural networks with random noise increases both learning efficiency and generalization ability without weight transport. First, we found that random noise training modifies forward weights to match backward synaptic feedback, which is necessary for conveying error signals in feedback alignment. As a result, a network with pre-aligned weights learns notably faster and reaches higher accuracy than a network without random noise training, with performance even comparable to that of the backpropagation algorithm. We also found that the effective dimensionality of weights decreases in a network pretrained with random noise. This pre-regularization allows the network to learn simple, low-rank solutions, reducing the generalization loss during subsequent training. It also enables the network to generalize robustly to a novel, out-of-distribution dataset. Lastly, we confirmed that random noise pretraining reduces the meta-loss, enhancing the network's ability to adapt to various tasks. Overall, our results suggest that random noise training with feedback alignment offers a straightforward yet effective method of pretraining that facilitates quick and reliable learning without weight transport.
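The mechanism described in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the one-hidden-layer architecture, layer sizes, learning rate, and the helper names `fa_step`, `pretrain_with_noise`, and `cosine_alignment` are all assumptions made for illustration. The sketch trains with feedback alignment, where a fixed random matrix `B` replaces the transposed forward weights in the backward pass, on Gaussian noise inputs paired with random labels. It then reports the cosine similarity between the forward weights `W2` and `B.T`. The abstract reports that this kind of alignment emerges during noise training; how much this toy setup shows depends on the hyperparameters chosen here.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)   # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def cosine_alignment(W, B):
    """Cosine similarity between forward weights W (out x hidden) and B.T."""
    w, b = W.ravel(), B.T.ravel()
    return float(w @ b / (np.linalg.norm(w) * np.linalg.norm(b)))

def fa_step(W1, W2, B, x, t, lr):
    """One feedback-alignment update, applied in place to W1 and W2.

    x: (n, d_in) inputs, t: (n, d_out) one-hot targets.
    B: (hidden, d_out) fixed random feedback matrix; it is never updated.
    """
    a = x @ W1.T                       # hidden pre-activations, (n, hidden)
    h = np.maximum(a, 0.0)             # ReLU
    y = softmax(h @ W2.T)              # class probabilities, (n, d_out)
    e = (y - t) / x.shape[0]           # batch-averaged cross-entropy error
    # Backprop would use e @ W2 here; feedback alignment uses e @ B.T,
    # so the forward weights are never transported to the backward path.
    delta_h = (e @ B.T) * (a > 0)
    W2 -= lr * e.T @ h
    W1 -= lr * delta_h.T @ x

def pretrain_with_noise(W1, W2, B, rng, steps=500, batch=64, lr=0.1):
    """Train on Gaussian noise inputs with uniformly random labels."""
    d_in, d_out = W1.shape[1], W2.shape[0]
    for _ in range(steps):
        x = rng.standard_normal((batch, d_in))
        labels = rng.integers(0, d_out, size=batch)   # independent of x
        fa_step(W1, W2, B, x, np.eye(d_out)[labels], lr)

# Hypothetical sizes, chosen only to keep the example fast.
rng = np.random.default_rng(0)
d_in, d_hidden, d_out = 20, 32, 5
W1 = 0.1 * rng.standard_normal((d_hidden, d_in))
W2 = 0.1 * rng.standard_normal((d_out, d_hidden))
B = rng.standard_normal((d_hidden, d_out))

before = cosine_alignment(W2, B)
pretrain_with_noise(W1, W2, B, rng)
after = cosine_alignment(W2, B)
print(f"alignment before: {before:.3f}, after: {after:.3f}")
```

After this step, the same `fa_step` function could be reused for training on real data, which is the setting in which the abstract reports faster learning.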

Highlights

- Weight alignment to synaptic feedback during random noise training

- Pretraining with random noise enables fast learning during subsequent data training

- Pre-regularization by random noise training enables robust generalization

- Task-agnostic fast learning for various tasks by a network pretrained with random noise
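The pre-regularization highlight rests on measuring the effective dimensionality of a weight matrix. One common way to quantify this is the participation ratio of the squared singular values, sketched below. This definition is an assumption made for illustration, and the paper may use a different measure. The function name `effective_dimensionality` is likewise hypothetical.

```python
import numpy as np

def effective_dimensionality(W):
    """Participation ratio of the squared singular values of W.

    Assumed definition: (sum_i s_i^2)^2 / sum_i s_i^4.
    It equals the rank when all nonzero singular values are equal and
    moves toward 1 as a single direction dominates. W must be nonzero.
    """
    s = np.linalg.svd(W, compute_uv=False)
    lam = s ** 2
    return float(lam.sum() ** 2 / (lam ** 2).sum())

full_rank = np.eye(4)                                          # four equal singular values
rank_one = np.outer(np.arange(1.0, 5.0), np.arange(1.0, 7.0))  # a single dominant direction

print(effective_dimensionality(full_rank))
print(effective_dimensionality(rank_one))
```

For the identity matrix the value is about 4, and for the rank-one matrix it is about 1. Applied to a layer's weights before and after pretraining, a lower value would indicate that the weights occupy fewer effective dimensions.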

Publication Information

Preprint and Proceedings

- Pretraining with random noise for fast and robust learning without weight transport (2024)

- Jeonghwan Cheon, Sang Wan Lee, Se-Bum Paik

- arXiv 2405.16731

Conferences

- Neural Information Processing Systems (NeurIPS), Dec 2024, Vancouver, BC, CA.

- Jeonghwan Cheon, Sang Wan Lee, Se-Bum Paik.

- “Pretraining with random noise for fast and robust learning without weight transport”

- CSHL Meeting—from Neuroscience to Artificially Intelligent Systems (NAISys), Sep 2024, Cold Spring Harbor, NY, US.

- Jeonghwan Cheon, Sang Wan Lee, Se-Bum Paik.

- “Random noise enables reliable learning without weight transport”

Workshop Presentations

- 3rd CSBD International Workshop @ IBS, Dec. 5, 2024, Daejeon, KR.

- Jeonghwan Cheon, Sang Wan Lee, Se-Bum Paik.

- “Pretraining with Random Noise for Biologically Plausible Deep Learning”

- 2024 Asia-Pacific Computational and Cognitive Neuroscience Conference (AP-CCN), Nov. 22, 2024, Daejeon, KR.

- Jeonghwan Cheon, Sang Wan Lee, Se-Bum Paik.

- “Pretraining with Random Noise for Boosting Neural Network Learning without Weight Transport”

ⓒ 2024. Jeonghwan Cheon. All Rights Reserved.